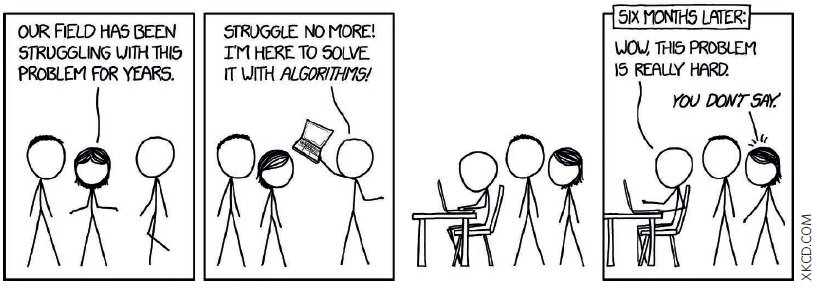

Building machine learning models for ADMET (Absorption, Distribution, Metabolism, Excretion, and Toxicity) predictions puts you on the front lines of drug discovery. It’s exciting work: pushing the boundaries of what’s possible, using algorithms to predict how molecules behave in the body. But the part they don’t tell you? The real battle isn’t in designing models or tuning hyperparameters.

It’s in the data—the messy, stubborn, inconsistent data that refuses to cooperate. The work of data cleaning and curation isn’t glamorous. There are no applause-worthy moments when you finally fix a mislabeled column or track down a missing unit. But here’s the hard truth: no matter how sophisticated your model is, it’s only as good as the data you feed it. Bad data guarantees bad predictions. Period.

So if you’ve ever found yourself frustrated—neck-deep in mismatched datasets, experimental noise, and duplicates—you’re not alone. Every ADMET researcher has been there. Let’s get into the weeds of what makes this process so difficult and why it’s also the most important part of building reliable ADMET models.

Why ADMET Data Is So Messy

ADMET data doesn’t follow the clean, structured patterns you might find in finance or weather forecasting. Biological data comes from many labs, each with its own methods, systems, and reporting standards. Even small inconsistencies—different scales, units, or experimental conditions—can ruin your predictions if left unchecked. And because ADMET datasets are often smaller than those in fields like NLP or computer vision, there’s not much room for error.

Here are some of the most common challenges you’ll face when working with ADMET data and how they can derail even the best models.

1. Inconsistent Units and Scales

It’s amazing how something as small as a unit mismatch can throw your dataset into chaos. Labs measure the same properties—like solubility or clearance—using different units (mg/mL vs. µg/mL) or on varying scales (log-transformed vs. linear). If you don’t catch these discrepancies early, you’ll end up comparing apples to oranges.

I once spent days debugging a model that felt off, only to discover that half the dataset had been reported in log scale, while the other half was in linear scale. Cross-checking conversion tables and redoing calculations took time, and it wasn’t exciting work. But it had to be done, because good models aren’t just built on algorithms; they’re built on clean, consistent data.
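To make the unit and scale problem concrete, here is a minimal sketch that converts mixed solubility records to a single representation, log10 of the value in mg/mL. The record layout, the `unit` and `scale` labels, and the function name are assumptions made for illustration; a real dataset will need its own conversion table.

```python
import math

# Conversion factors to mg/mL. The unit labels are assumptions; extend as needed.
TO_MG_PER_ML = {"mg/mL": 1.0, "ug/mL": 1e-3, "µg/mL": 1e-3, "g/L": 1.0}


def to_log10_mg_per_ml(value, unit, scale):
    """Return log10(solubility in mg/mL) for one record.

    scale is "linear" or "log10"; a log10 value is assumed to be the
    log10 of the number expressed in `unit`.
    """
    if unit not in TO_MG_PER_ML:
        raise ValueError(f"unknown unit: {unit!r}")
    if scale == "log10":
        log_value = value
    elif scale == "linear":
        if value <= 0:
            raise ValueError("linear value must be positive to take a log")
        log_value = math.log10(value)
    else:
        raise ValueError(f"unknown scale: {scale!r}")
    # Multiplying by a factor in linear space is adding its log in log space.
    return log_value + math.log10(TO_MG_PER_ML[unit])


# Made-up records: the same solubility reported three different ways.
records = [
    {"compound": "A", "value": 0.5, "unit": "mg/mL", "scale": "linear"},
    {"compound": "B", "value": 500.0, "unit": "ug/mL", "scale": "linear"},
    {"compound": "C", "value": 2.69897, "unit": "ug/mL", "scale": "log10"},
]
normalized = {
    r["compound"]: to_log10_mg_per_ml(r["value"], r["unit"], r["scale"])
    for r in records
}
```

Raising on an unknown unit or scale, rather than passing the value through, is deliberate: a silent default is how a half-log, half-linear dataset ends up in a model.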

2. Experimental Variability Across Labs

Even when two labs measure the same property, their results rarely match perfectly. Equipment, protocols, and environmental factors all introduce variability. One lab might report a 10-minute half-life for a compound in a clearance assay, while another reports 20 minutes for the same experiment. Neither is necessarily wrong; they just measured under slightly different conditions.

Standardizing datasets to account for variability is essential. This often means filtering out outliers, identifying trends, and adjusting for known biases. It’s a slow, methodical process, but if you skip it, your predictions will be unreliable.
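One way to look for a known bias is to estimate a per-lab offset from compounds that several labs have measured. The sketch below is only an illustration: the numbers are made up, values are assumed to be on a log10 scale already, and the 0.2 log-unit threshold is an arbitrary choice, not a recommendation.

```python
from collections import defaultdict
from statistics import median

# (compound, lab, log10 value): made-up numbers for illustration only.
measurements = [
    ("cpd1", "lab_A", 1.00), ("cpd1", "lab_B", 1.30), ("cpd1", "lab_C", 1.05),
    ("cpd2", "lab_A", 2.00), ("cpd2", "lab_B", 2.35), ("cpd2", "lab_C", 1.95),
    ("cpd3", "lab_A", 0.50), ("cpd3", "lab_B", 0.80), ("cpd3", "lab_C", 0.55),
]


def lab_offsets(rows):
    """Median deviation of each lab from the per-compound median."""
    by_compound = defaultdict(list)
    for compound, _lab, value in rows:
        by_compound[compound].append(value)
    consensus = {c: median(v) for c, v in by_compound.items()}

    residuals = defaultdict(list)
    for compound, lab, value in rows:
        residuals[lab].append(value - consensus[compound])
    return {lab: median(r) for lab, r in residuals.items()}


offsets = lab_offsets(measurements)
# Labs whose typical deviation exceeds the (arbitrary) threshold.
suspect_labs = [lab for lab, off in offsets.items() if abs(off) > 0.2]
```

A flagged lab is a prompt to go read its protocol, not a license to subtract the offset blindly. With only a handful of shared compounds the estimate is noisy.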

3. Missing or Ambiguous Metadata

One of the biggest challenges in ADMET data is the absence of critical metadata. A solubility value without context, like the pH at which it was measured, is close to useless. Similarly, permeability measurements that don’t say whether they came from Caco-2 cells or another system will send your predictions off the rails.

When metadata is missing, you’re left making educated guesses. And guesswork can be dangerous in ADMET modeling. Without complete context, even the most accurate algorithms can’t compensate for mismatched or incomplete data. It’s frustrating, but learning to ask the right questions about your data—and knowing when to cut your losses—is part of the job.
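A simple guard is to declare which metadata fields each endpoint needs and set aside any record that lacks them. This is a minimal sketch; the field names (`pH`, `temperature_C`, `cell_line`) and the `REQUIRED` table are assumptions for illustration, and the records are made up.

```python
# Metadata each endpoint must carry before a value is usable (assumed fields).
REQUIRED = {
    "solubility": ("pH", "temperature_C"),
    "permeability": ("cell_line",),
}


def missing_metadata(record):
    """Return the required metadata fields that are absent or empty."""
    required = REQUIRED.get(record.get("endpoint"), ())
    return [key for key in required if record.get(key) in (None, "")]


# Made-up records for illustration only.
records = [
    {"id": 1, "endpoint": "solubility", "value": 0.5, "pH": 7.4, "temperature_C": 25},
    {"id": 2, "endpoint": "solubility", "value": 1.2, "pH": None, "temperature_C": 25},
    {"id": 3, "endpoint": "permeability", "value": 12.0, "cell_line": "Caco-2"},
    {"id": 4, "endpoint": "permeability", "value": 8.0},
]

usable = [r for r in records if not missing_metadata(r)]
# Keep the reason with each rejected record so it can be chased up later.
needs_review = {r["id"]: missing_metadata(r) for r in records if missing_metadata(r)}
```

Keeping the rejected records with the reason attached, instead of dropping them, makes it easier to go back to the source paper or decide later to cut your losses.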

4. Duplicates and Conflicting Data

Aggregating data from multiple sources always introduces duplication issues. It’s not uncommon to encounter the same compound with slightly different values across datasets. The tricky part is deciding what to keep. Do you average the values? Use the most recent report? Prioritize high-impact journals over lesser-known ones?

There are no one-size-fits-all answers here. The choices you make—what you keep, what you discard—shape your model’s performance. Over time, you learn which data to trust through validation and experience. Mistakes will happen, but that’s okay. The key is to reflect, adjust, and improve your process with each iteration. A good model isn’t just built—it’s refined step by step.
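Whatever rule you choose, it helps to write it down as code so the choice is explicit and repeatable. The sketch below shows one possible policy, not the right one: group by a structure key, take the median when replicates agree, and set the compound aside when they don't. The keys are placeholders, the values are made up, and the 0.5 log-unit spread limit is an arbitrary assumption.

```python
from collections import defaultdict
from statistics import median


def merge_duplicates(rows, max_spread=0.5):
    """Merge replicate values per structure key.

    Returns (merged, conflicts): merged maps key -> median value for keys
    whose values agree within max_spread; conflicts maps key -> raw values
    for keys that disagree and need a manual decision.
    """
    grouped = defaultdict(list)
    for key, value in rows:
        grouped[key].append(value)

    merged, conflicts = {}, {}
    for key, values in grouped.items():
        if max(values) - min(values) > max_spread:
            conflicts[key] = values
        else:
            merged[key] = median(values)
    return merged, conflicts


# Placeholder keys (stand-ins for e.g. an InChIKey) and made-up log10 values.
rows = [
    ("KEY-AAA", 1.0), ("KEY-AAA", 1.2),
    ("KEY-BBB", 0.3),
    ("KEY-CCC", 2.0), ("KEY-CCC", 3.5),
]
merged, conflicts = merge_duplicates(rows)
```

The conflicts are returned rather than averaged away because a large disagreement is often a sign of a unit or assay mismatch upstream.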

5. Predicted Data Masquerading as Experimental

Here’s one you don’t expect: datasets filled with in-silico predictions disguised as experimental results. These predictions often slip into databases without clear labeling, so it’s an easy trap to fall into, and it can wreck your model by introducing bias and overfitting. I’ve learned (the hard way) to cross-reference the datasets I use. If a result feels too “clean,” it’s worth double-checking whether it’s genuinely experimental.

Bad inputs guarantee bad outputs—it’s that simple. Taking the time to validate your data sources may feel tedious, but it’s critical. Skipping this step is a shortcut to failure.

Data Curation: A Constant Loop, Not a Single Step

Curation isn’t a task you complete once and move on from—it’s a loop. You clean the data, build a model, test it, and almost always discover something you missed. Back to the spreadsheets you go, tracking down errors, reformatting, and filtering. It’s a repetitive process, and sometimes it feels like it will never end. But every iteration gets you closer to a reliable model.

I once built a model that looked solid—until I realized several structural duplicates were skewing the results. It was frustrating, but it’s part of the process. A good ADMET model doesn’t come from shortcuts. It comes from embracing the grind and getting comfortable with the iterative nature of data work.

For a deeper dive into the data curation process, check out my earlier blog post on MDCK data curation, where I tackle some specific challenges and solutions that can apply across the board.

Don’t Ignore the Chemistry

Data cleaning goes beyond just experimental values; chemical structures are often riddled with errors. It’s not unusual to find inconsistently drawn molecules, missing stereochemistry, or incorrect functional groups. I’ve encountered several cases of flawed structures not only in databases but also in the original papers. Feeding inaccurate structures into your model guarantees poor predictions.

Tools like RDKit and ChemAxon are invaluable for standardizing chemical structures and identifying issues such as tautomers or salts. However, no tool can substitute for manual inspection. With time, you’ll develop the ability to spot these errors, but it requires patience and practice.

Knowing When to Let Go

Not every data point is worth saving. Some are too noisy, others are incomplete, and a few will drag your model’s performance down. One of the hardest lessons in ADMET modeling is knowing when to walk away from bad data.

Early on, I used to hoard weird outliers, thinking, “What if this one unlocks a key insight?” Spoiler: it never did. Bad data, like bad relationships, will only hold you back. The sooner you learn to cut your losses, the better your model will be.

Final Thoughts: Embrace the Grind

Here’s the reality: 80% of ADMET modeling is data curation. The machine learning part? That’s the easier bit. Clean, well-organized data is the foundation of every good model. Without it, even the most advanced algorithms are worthless.

If you’re deep in spreadsheets, feeling frustrated, and wondering if it’s worth it—hang in there. Every mistake you catch and every inconsistency you correct brings you closer to a model you can trust.

Looking Ahead: Streamlining the Process

The good news? It doesn’t always have to feel like an uphill battle. In future posts, I’ll share practical ways to automate parts of the data cleaning process, including tutorials on mining data from public databases and literature sources with automated workflows. Python scripts and RDKit workflows can handle some of the heavy lifting, freeing you up to focus on building ML models. For instance, you can learn how to scrape FDA drug approval data with Python to kickstart your data collection.

The grind never really ends, but you’ll get better at navigating it—and that makes all the difference.

If you’re interested in visualizing your data, check out my guide on plotting bar charts with chemical structures, or learn how to merge multiple datasets with Pandas and Python for more streamlined data management. Additionally, for those who want to enhance their data representation skills, don’t miss my post on molecular substructure highlighting with RDKit.

Here are the additional links for easy reference:

How to Merge Multiple Datasets with Pandas and Python

How to Scrape FDA Drug Approval Data with Python

Molecular Substructure Highlighting with RDKit

How to Plot Bar Charts with Chemical Structures

Thank you for reading this far! I hope you find it useful. See you soon!